Looking into the paper "Harnessing the Power of LLMs in Practice: A Survey on ChatGPT and Beyond"

Main Model Types

The paper groups foundation language models into two types: encoder-decoder or encoder-only language models, and decoder-only language models.

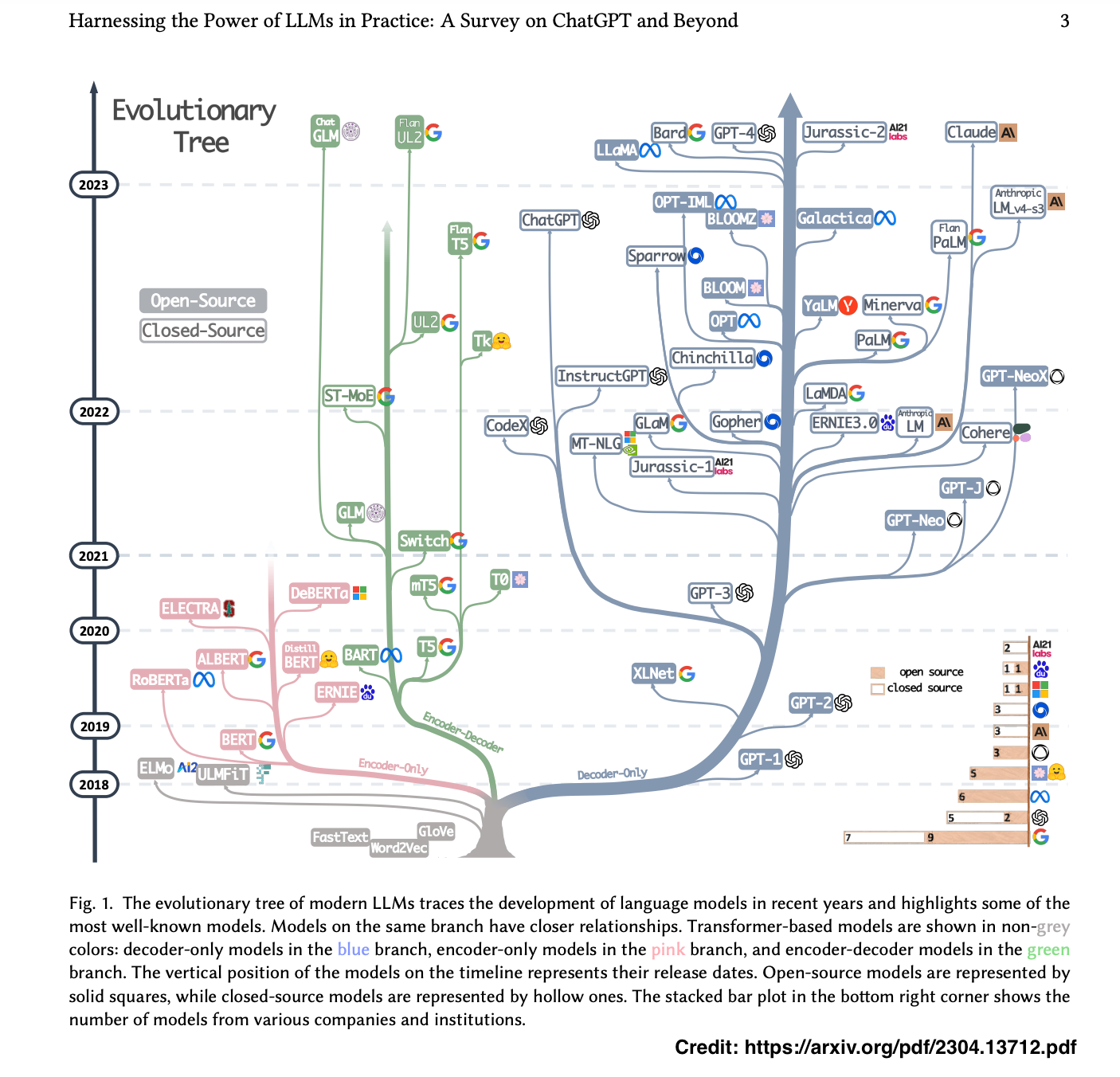

The figure above shows the evolutionary tree of these models.

Initially, decoder-only models were not very popular, whereas encoder-only models (pink in the figure above) were widely used.

More recently, the situation has reversed, and decoder-only models (shown in blue) now account for most of the popularity.

BERT-style language models, including BERT, RoBERTa and T5, share a common training paradigm known as masked language modeling, which predicts masked word(s) from the surrounding context.
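
The masked-language-modeling objective can be illustrated with a minimal Python sketch of how one training example is built. It assumes whitespace-tokenized input, and `make_mlm_example` is a hypothetical helper, not code from the paper or any library. It is deliberately simplified: BERT also replaces some selected tokens with random words or leaves them unchanged instead of always inserting `[MASK]`, and T5 masks contiguous spans rather than single tokens.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"


def make_mlm_example(
    tokens: List[str], mask_prob: float = 0.15, seed: int = 0
) -> Tuple[List[str], List[Optional[str]]]:
    """Hypothetical helper: hide a random subset of tokens for masked language modeling.

    The model sees `inputs` and is trained to recover `labels` at the masked
    positions, using context on BOTH sides of each mask.
    """
    rng = random.Random(seed)  # seeded so the example is reproducible
    inputs: List[str] = []
    labels: List[Optional[str]] = []
    for tok in tokens:
        if rng.random() < mask_prob:  # 0.15 default mirrors BERT's masking rate
            inputs.append(MASK)  # the model sees only the mask token here
            labels.append(tok)  # and must predict the original token
        else:
            inputs.append(tok)
            labels.append(None)  # no loss is computed at unmasked positions
    return inputs, labels


if __name__ == "__main__":
    inputs, labels = make_mlm_example("the cat sat on the mat".split(), mask_prob=0.3)
    print(inputs)
    print(labels)
```

Because the loss is computed only at masked positions and the unmasked tokens on either side are visible, this objective suits bidirectional (encoder-style) models.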

Autoregressive language models such as GPT-3, OPT, PaLM and BLOOM are trained to generate the next word in a sequence given the preceding words.
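
The next-word objective can be sketched in a few lines of Python. The helpers `next_token_pairs`, `train_bigram` and `generate` are hypothetical names for illustration, and the bigram counter is only a toy stand-in for a real model: it conditions on the last token alone, whereas a decoder-only Transformer conditions on the entire preceding sequence.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple


def next_token_pairs(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """Each position yields one training example: all preceding tokens -> the next token."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def train_bigram(corpus: List[List[str]]) -> Dict[str, Counter]:
    """Toy model: count which token follows each token (uses only the last token of the prefix)."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for tokens in corpus:
        for prefix, nxt in next_token_pairs(tokens):
            counts[prefix[-1]][nxt] += 1
    return counts


def generate(model: Dict[str, Counter], prompt: List[str], max_new_tokens: int = 5) -> List[str]:
    """Greedy left-to-right generation: repeatedly append the most frequent continuation."""
    out = list(prompt)
    for _ in range(max_new_tokens):
        candidates = model.get(out[-1])  # .get does not insert missing keys into the defaultdict
        if not candidates:
            break  # no known continuation for this token
        out.append(candidates.most_common(1)[0][0])
    return out


if __name__ == "__main__":
    model = train_bigram([["large", "language", "models", "predict", "words"]])
    print(generate(model, ["large"]))
```

Training and generation use the same left-to-right factorization: every prefix of a training sequence supervises the following token, and at inference time each generated token is appended to the prefix before predicting the next one.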

For more information and details, please refer to the paper.